How to train a Stable Diffusion model using Google Colab and NFT art.

Let's Doodle

I was goofing off last night creating Stable Diffusion models with the Google Colab notebook linked below and figured I should write my steps down before I forget. In this process, we will take some known good NFT art and train up a model so that we can create more art in the same style.

First we will need some art.

I am a fan of the Doodles project and love their art style. So we will be using their Space Doodles project to make something new.

Most NFTs are stored on IPFS, so we just need a link to the images to download and prep them for training. I am using the Alchemy API to get the metadata from the Ethereum blockchain for each NFT. I then grab them with the requests module in Python and resize them with the Pillow module. Images for training only need to be 512x512 and Space Doodles are 1800x1800, so I needed to downsize them.
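Since Space Doodles are square, a plain resize to 512x512 is all they need. If you try this with a collection whose art is not square (an assumption beyond this article), a straight resize would stretch it. Here is a minimal sketch using Pillow's `ImageOps.fit`, which center-crops to the target aspect ratio and then resizes. `prep_image` is a hypothetical helper name, not part of the script below.

```python
from PIL import Image, ImageOps

TARGET_SIZE = (512, 512)  # training resolution used in this article


def prep_image(src_path, dst_path, size=TARGET_SIZE):
    """Hypothetical helper: center-crop and resize one image to `size`."""
    with Image.open(src_path) as im:
        # ImageOps.fit crops around the center to match the target aspect
        # ratio, then resizes, so non-square art is cropped, not stretched
        fitted = ImageOps.fit(im, size, method=Image.LANCZOS)
    fitted.save(dst_path)
    return fitted.size
```

For square 1800x1800 art this behaves like a plain resize; the crop only kicks in when the aspect ratio differs from 1:1.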

Here we go:

Get the images with Python and the Alchemy API

Docs for the Alchemy NFT API used in the script below:

https://docs.alchemy.com/reference/getnftsforcollection

Sign up for an account and set up an app on the proper blockchain [Ethereum]

https://dashboard.alchemy.com/

We will be getting the Space Doodles info and images:

https://opensea.io/collection/space-doodles-official

Contract address:

0x620b70123fB810F6C653DA7644b5dD0b6312e4D8
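The script below takes the first 40 NFTs from a single API response. If you want more images than one response returns, the v2 API pages its results. Here is a minimal pagination sketch, assuming the `startToken` request parameter and the `nextToken` response field described in the Alchemy v2 docs linked above (double-check them there). `build_collection_url` and `iter_collection_nfts` are hypothetical helper names, and the fetch function is passed in so the paging logic stays independent of `requests`.

```python
from urllib.parse import urlencode

# Host taken from the script in this article; check the Alchemy docs for
# the current host name before relying on it.
BASE_URL = "https://eth-mainnet.alchemyapi.io/nft/v2/{api_key}/getNFTsForCollection"


def build_collection_url(api_key, contract_address, start_token=None):
    """Hypothetical helper: build a getNFTsForCollection request URL."""
    params = {"contractAddress": contract_address, "withMetadata": "true"}
    if start_token is not None:
        # Assumption: Alchemy v2 uses `startToken` to request the next page
        params["startToken"] = start_token
    return BASE_URL.format(api_key=api_key) + "?" + urlencode(params)


def iter_collection_nfts(fetch_json, api_key, contract_address, max_nfts=40):
    """Hypothetical helper: yield NFTs page by page, up to max_nfts.

    fetch_json is any callable that takes a URL and returns parsed JSON,
    for example: lambda url: requests.get(url, timeout=30).json()
    """
    if max_nfts <= 0:
        return
    start_token = None
    count = 0
    while True:
        url = build_collection_url(api_key, contract_address, start_token)
        page = fetch_json(url)
        for nft in page.get("nfts", []):
            yield nft
            count += 1
            if count >= max_nfts:
                return
        # Assumption: the response includes `nextToken` while more pages exist
        start_token = page.get("nextToken")
        if not start_token:
            return
```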

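Download and prep the images using a Python script. It reads your Alchemy API key from the ALCHEMY_API_KEY environment variable and needs the requests and Pillow packages installed: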

```python
import os

import requests
from PIL import Image, UnidentifiedImageError

ALCHEMY_API_KEY = os.getenv('ALCHEMY_API_KEY')
CONTRACT_ADDRESS = '0x620b70123fB810F6C653DA7644b5dD0b6312e4D8'
NFT_COLLECTION_NAME = 'Space Doodles'
NFT_IMG_DIR = './images'


def get_nfts_from_collection(collection_address):
    """
    Returns NFT data from the given collection address
    """
    url = f"https://eth-mainnet.alchemyapi.io/nft/v2/{ALCHEMY_API_KEY}/getNFTsForCollection"
    params = {'contractAddress': collection_address, 'withMetadata': 'true'}
    response = requests.get(url, params=params, timeout=30)
    # fail loudly on a bad API key or address instead of a KeyError later
    response.raise_for_status()
    return response.json()


def main():
    """ Get ~40 NFTs, download their images to a local directory """
    # create the output folders if they don't exist yet
    os.makedirs(f"{NFT_IMG_DIR}/original", exist_ok=True)
    os.makedirs(f"{NFT_IMG_DIR}/prepped_images", exist_ok=True)

    # Get contract and NFT data
    nft_data = get_nfts_from_collection(CONTRACT_ADDRESS)

    # Loop over each NFT to get the name and image
    for nft in nft_data['nfts'][:40]:
        # get the IPFS gateway URL
        img_url = nft['media'][0]['gateway']
        # get the image
        nft_img_data = requests.get(img_url, timeout=60)
        # format the image name
        nft_name = nft['title'].replace('#', '').replace(' ', '_')
        # add the file extension
        img_name = f"{nft_name}.png"
        # save the original image
        with open(f"{NFT_IMG_DIR}/original/{img_name}", "wb") as f:
            f.write(nft_img_data.content)
        # let operator know we saved a file
        print(f'Saved: {img_name}')

        # resize to 512x512
        # Note: Space Doodles are 1800x1800 (square), so they can be
        # resized straight to 512x512 without distortion
        try:
            with Image.open(f"{NFT_IMG_DIR}/original/{img_name}") as im:
                resized_im = im.resize((512, 512))
                resized_im.save(f"{NFT_IMG_DIR}/prepped_images/{img_name}")
        except UnidentifiedImageError:
            # for some NFTs the downloaded file may not be a readable image
            print(f"Failed to read image: {img_name}")

    print("DONE")


if __name__ == '__main__':
    main()
```
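Before uploading, it can be worth confirming that every prepped image really is 512x512 and readable. A minimal sketch of such a check, assuming the folder layout the script above creates; `check_prepped_images` is a hypothetical helper name:

```python
import os

from PIL import Image

# Matches NFT_IMG_DIR/prepped_images in the download script
PREPPED_DIR = "./images/prepped_images"


def check_prepped_images(folder=PREPPED_DIR, expected=(512, 512)):
    """Hypothetical helper: return (good, bad) lists of file names.

    "bad" means the file has the wrong size or could not be read.
    """
    good, bad = [], []
    for name in sorted(os.listdir(folder)):
        path = os.path.join(folder, name)
        try:
            with Image.open(path) as im:
                if im.size == expected:
                    good.append(name)
                else:
                    bad.append(name)
        except OSError:
            # PIL's UnidentifiedImageError is a subclass of OSError
            bad.append(name)
    return good, bad
```

Run it from the same folder as the download script, for example `good, bad = check_prepped_images()`, and delete or re-download anything that lands in `bad`.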

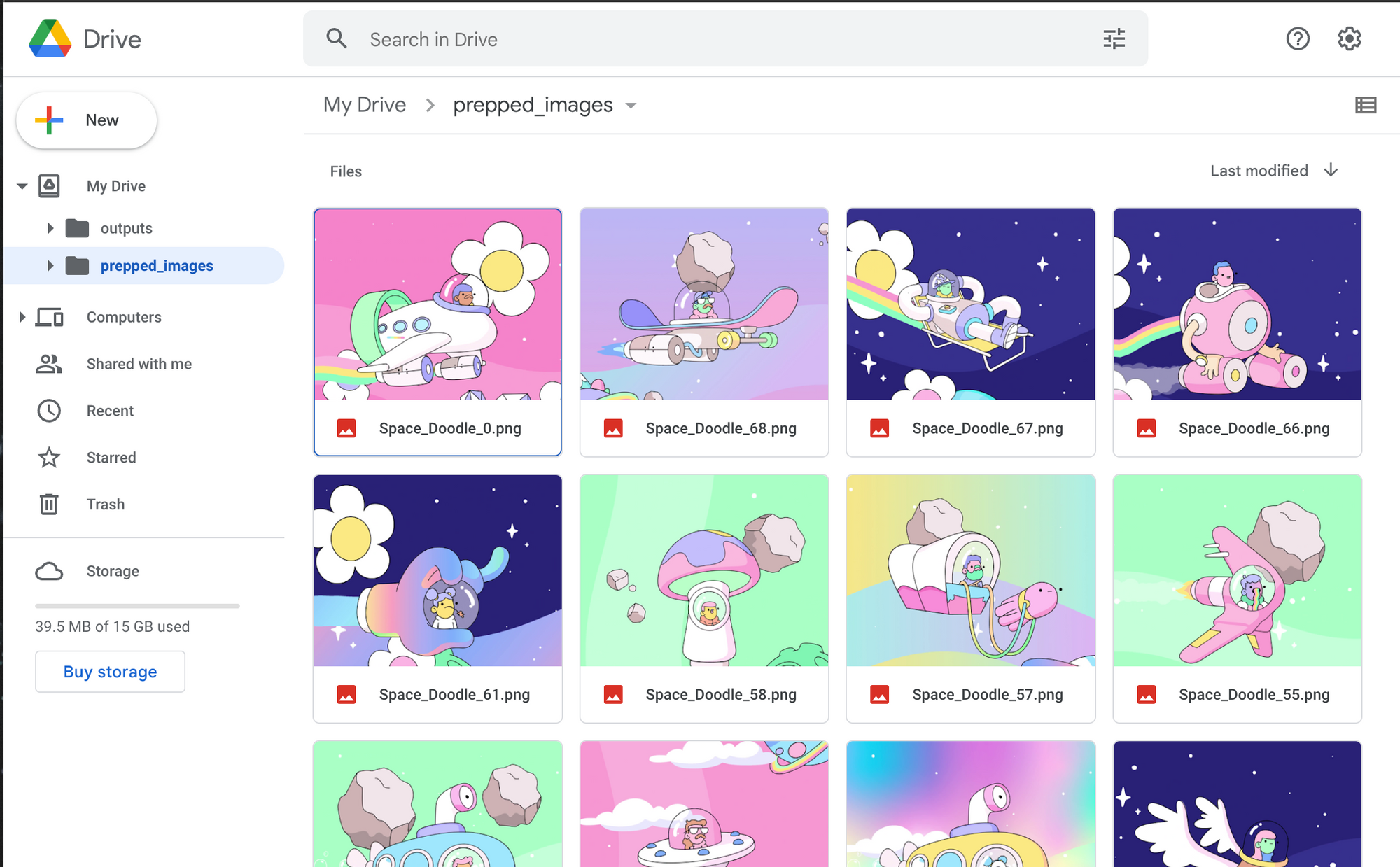

Once the script finishes, there will be an images/prepped_images folder that you can upload to your Google Drive.

Upload images to Drive

Training Day

Follow the instructions in my other article on how to set up a Google Colab [It has pictures]

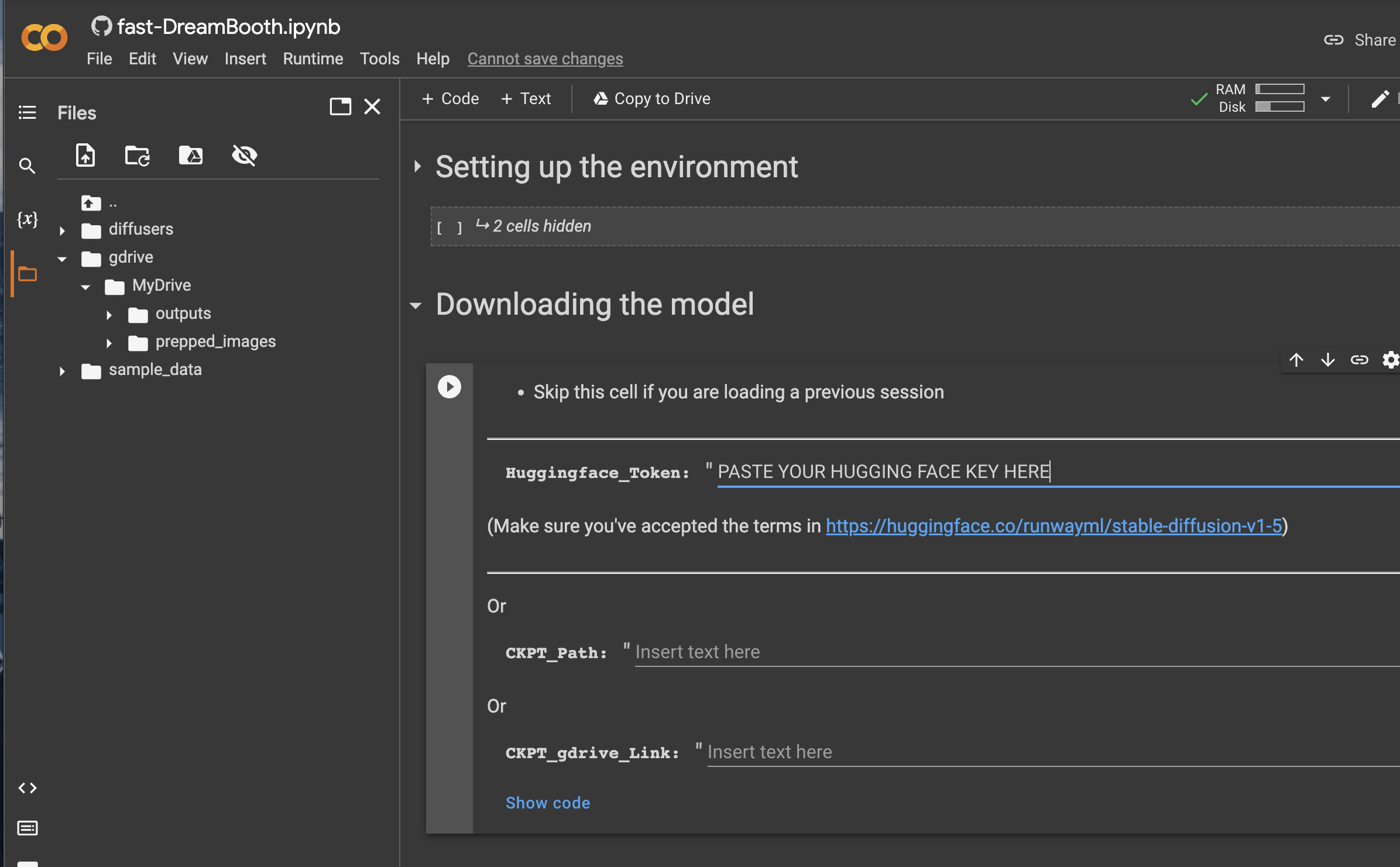

Open the Jupyter Notebook in Google Colab:

https://colab.research.google.com/github/TheLastBen/fast-stable-diffusion/blob/main/fast-DreamBooth.ipynb

Set up your instance, connect to Gdrive, and then set up the environment.

Get your token from Hugging Face

https://huggingface.co/settings/tokens

Then download the model

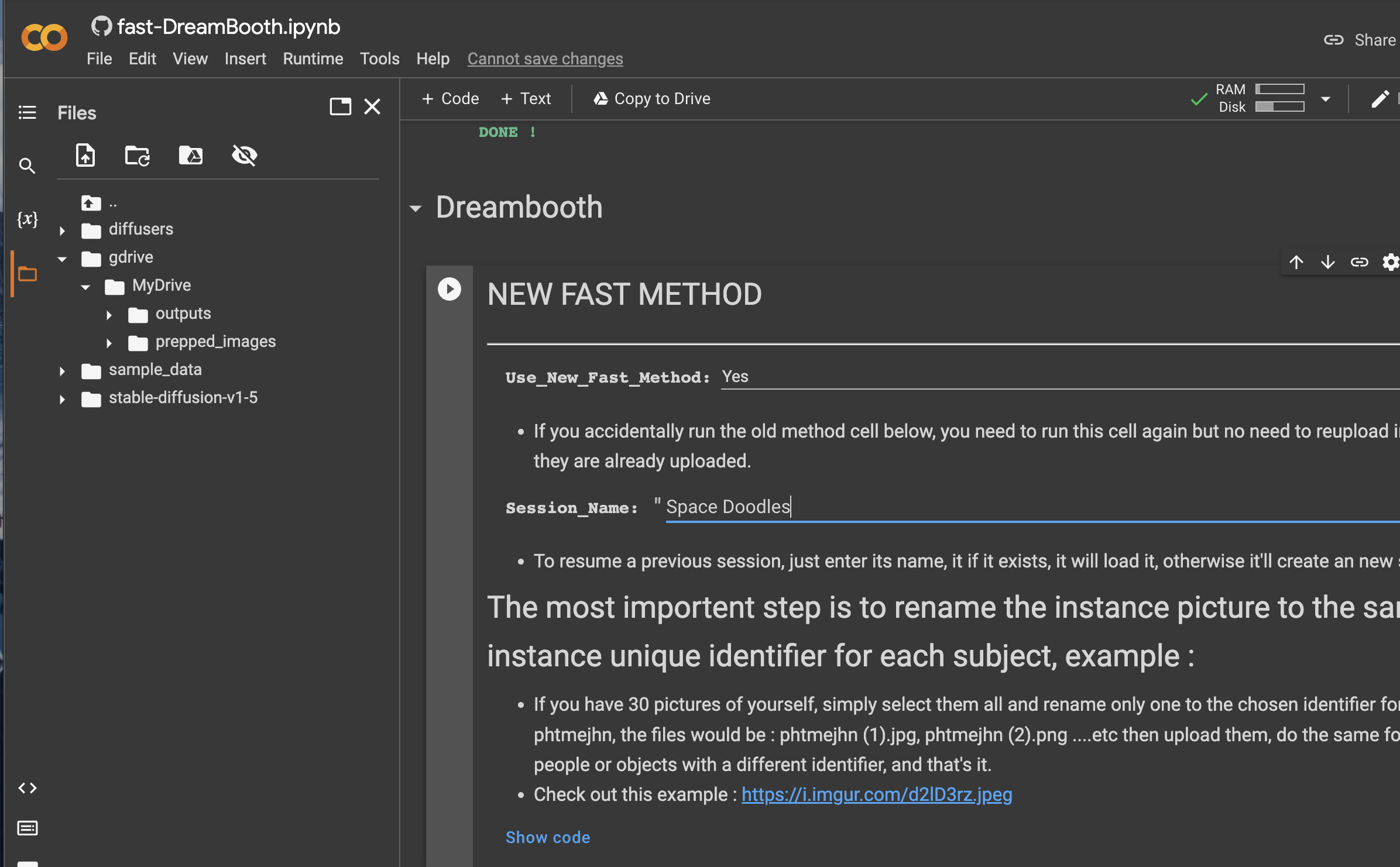

Use the Fast Method

Give your session the same name you'll use in your art prompts

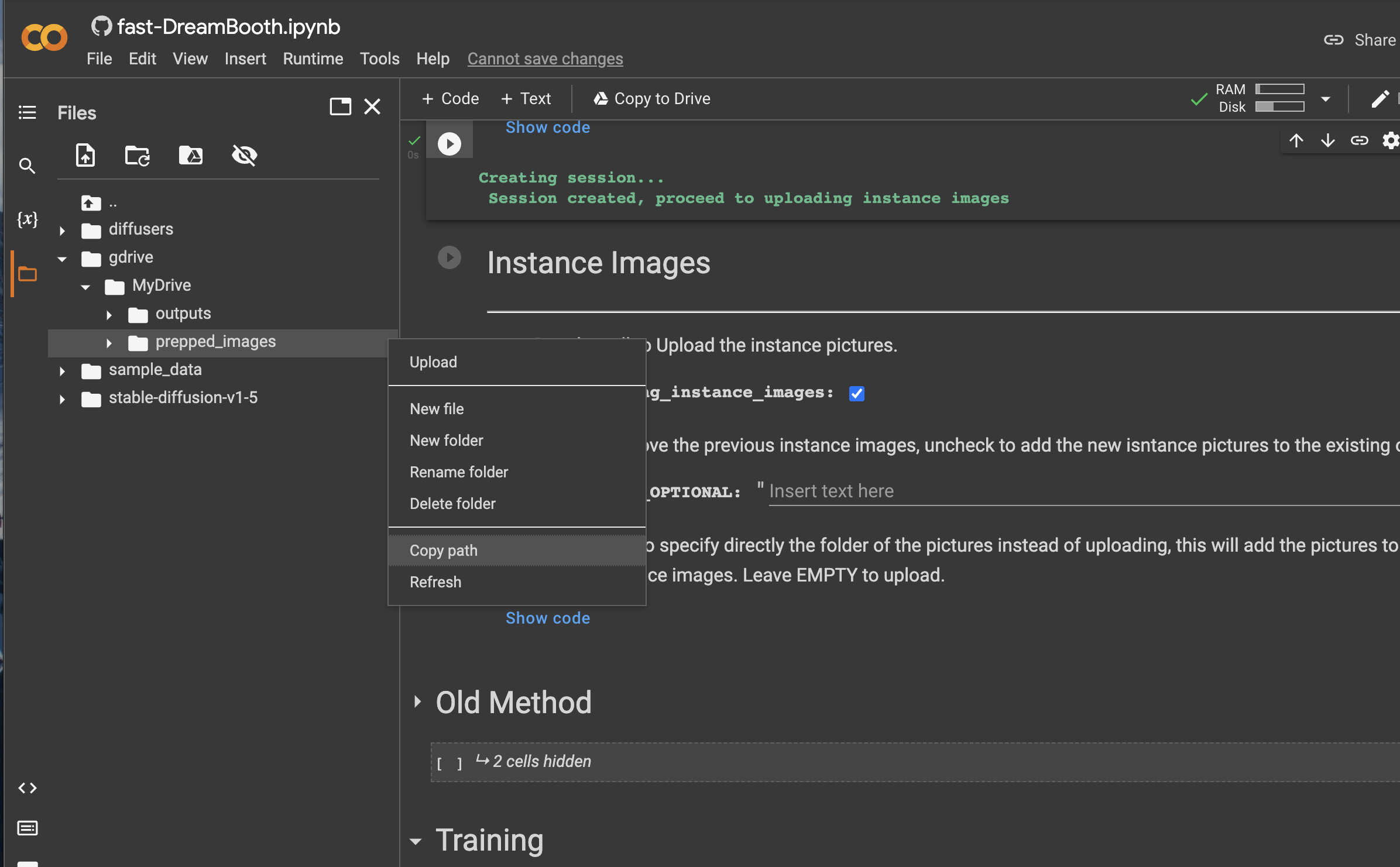

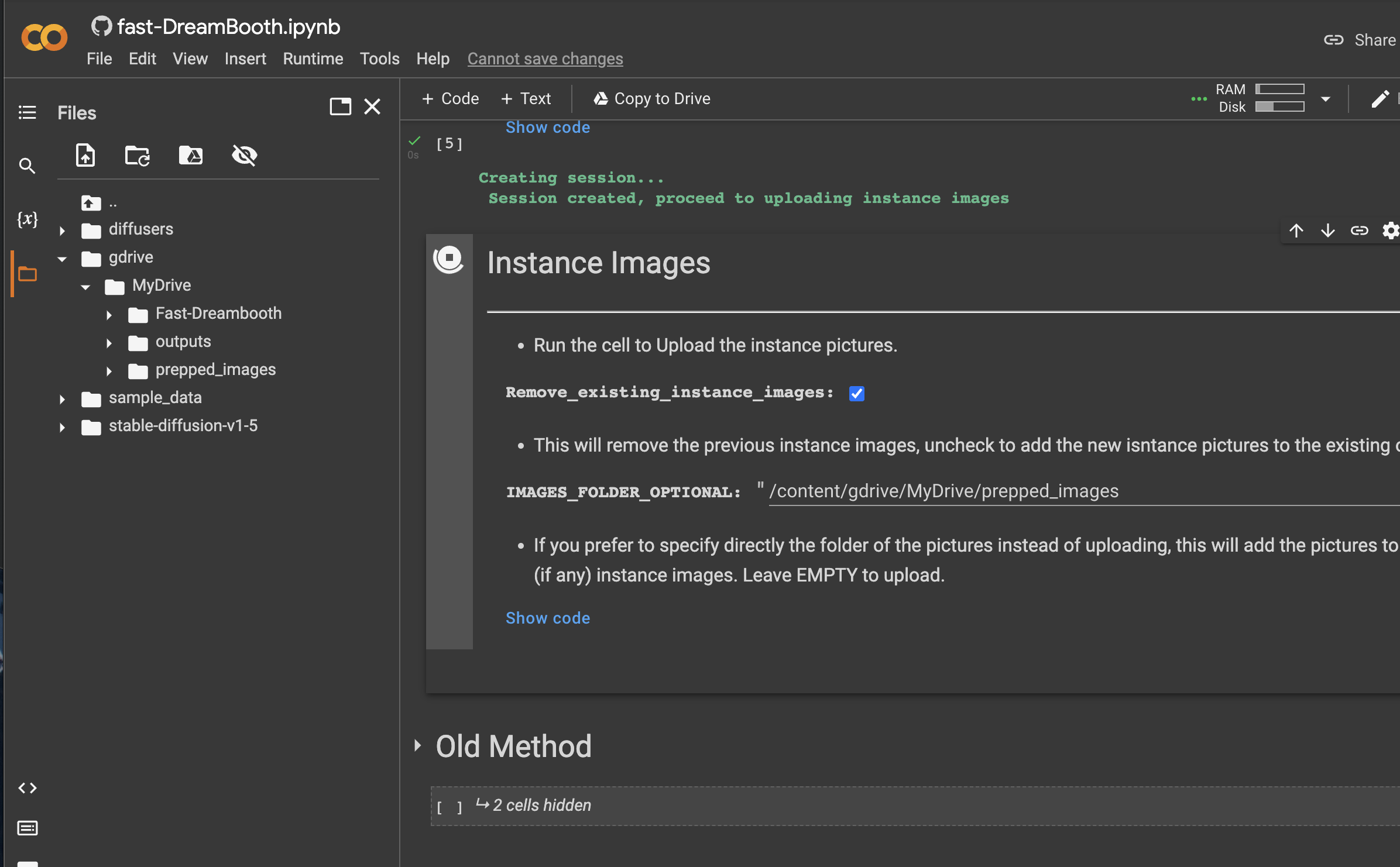

Point the notebook at the right set of images: copy the path to your prepped_images folder in Gdrive and paste it into the IMAGES_FOLDER_OPTIONAL field.

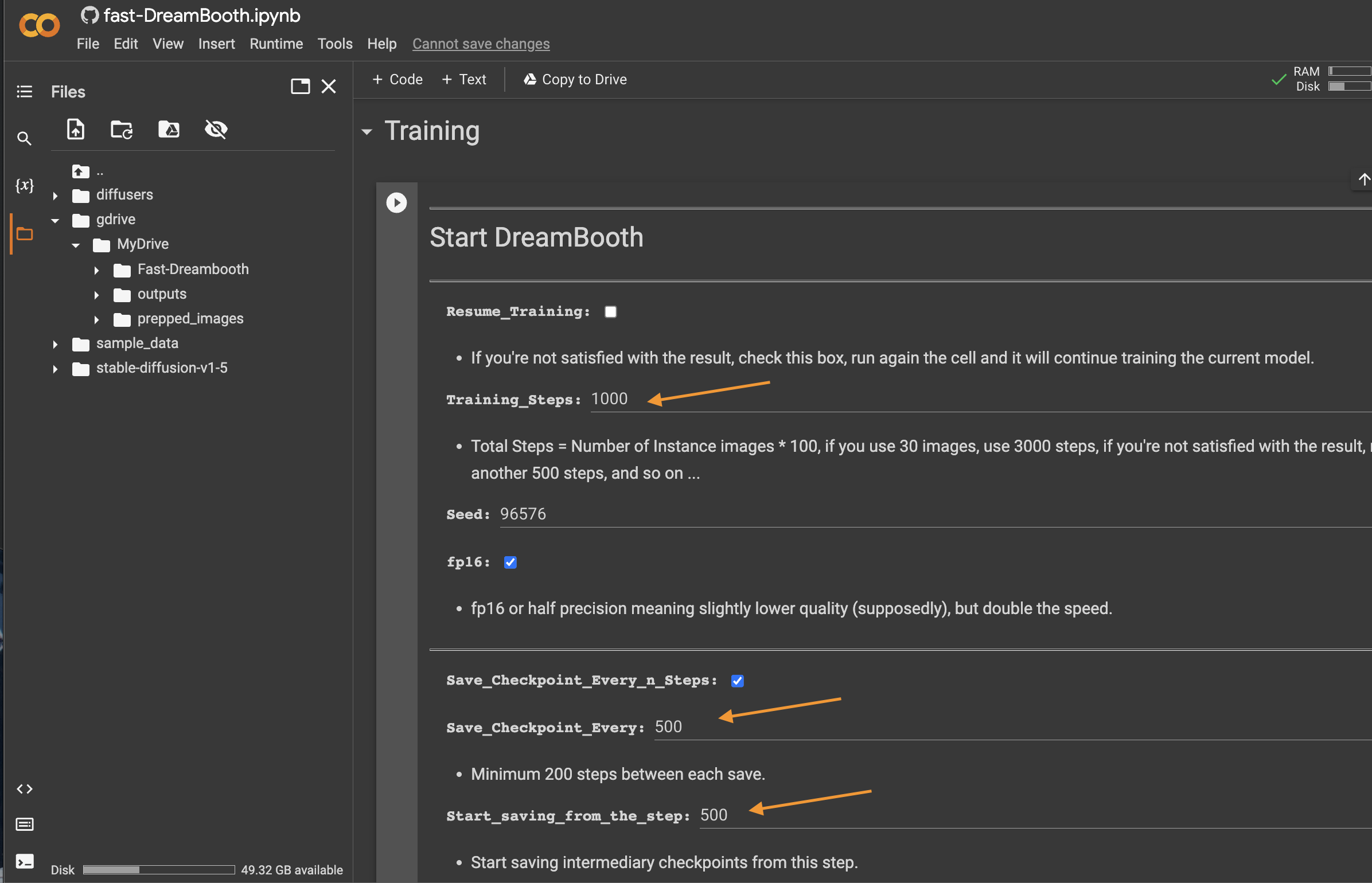

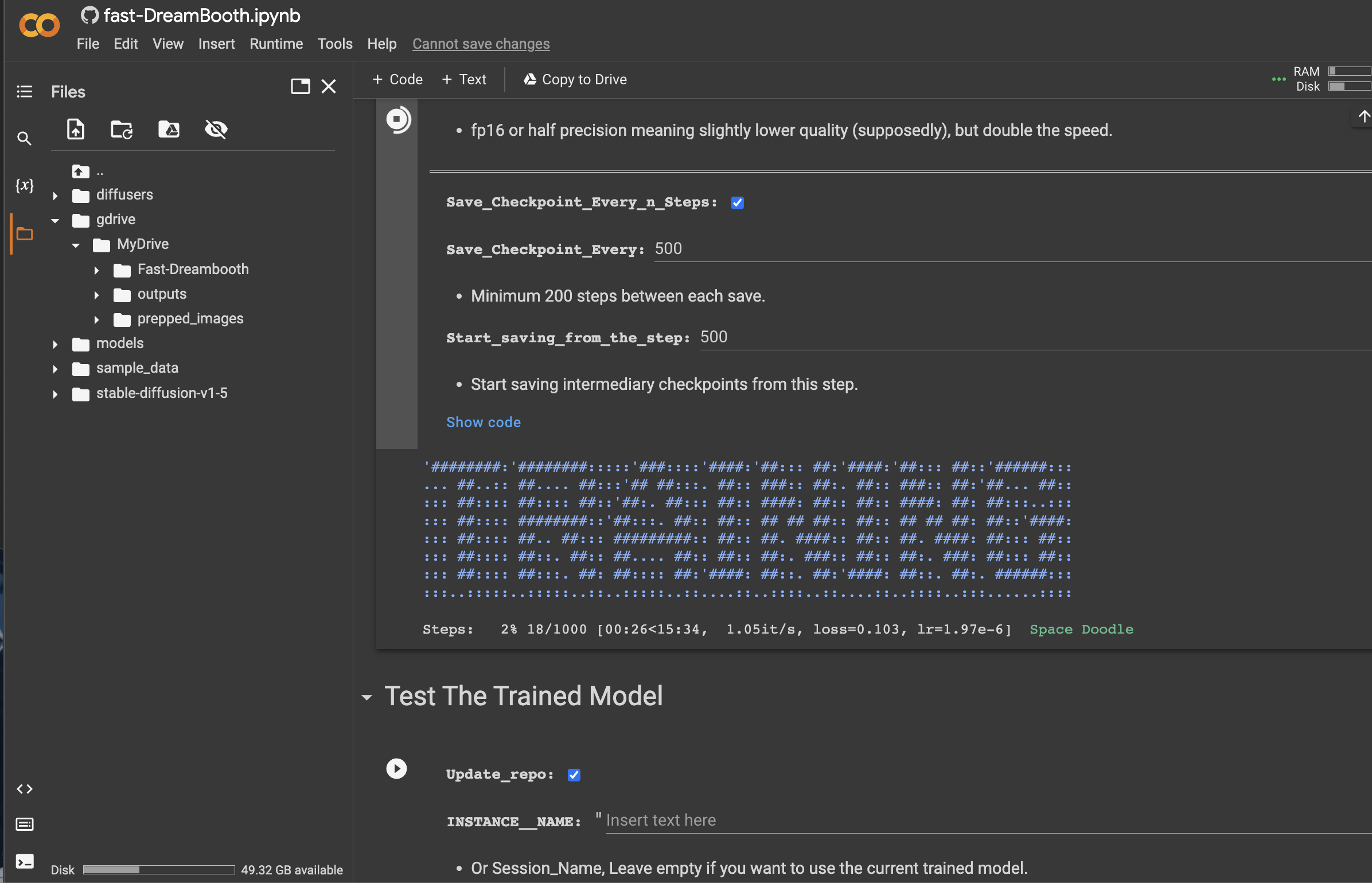

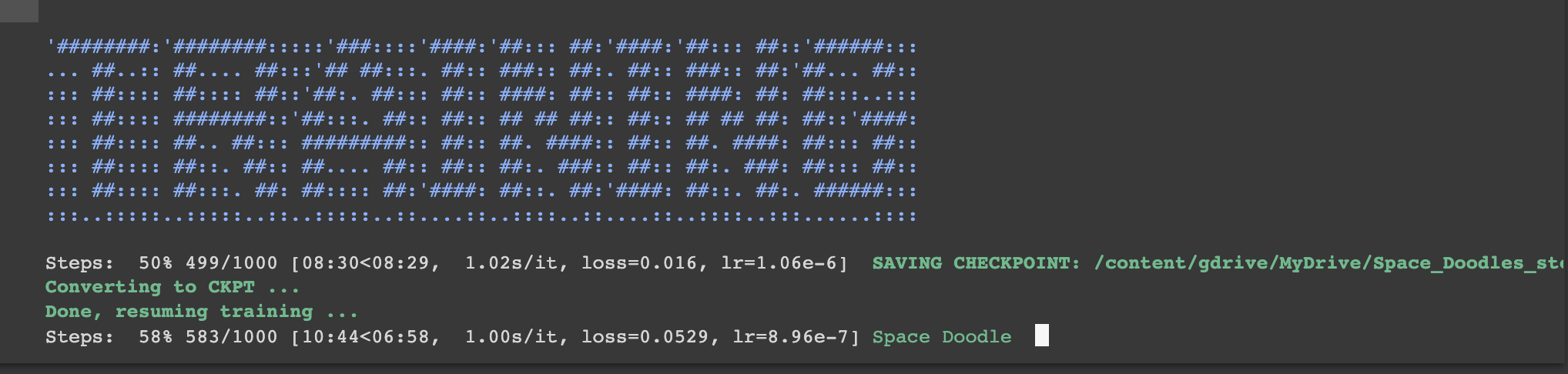

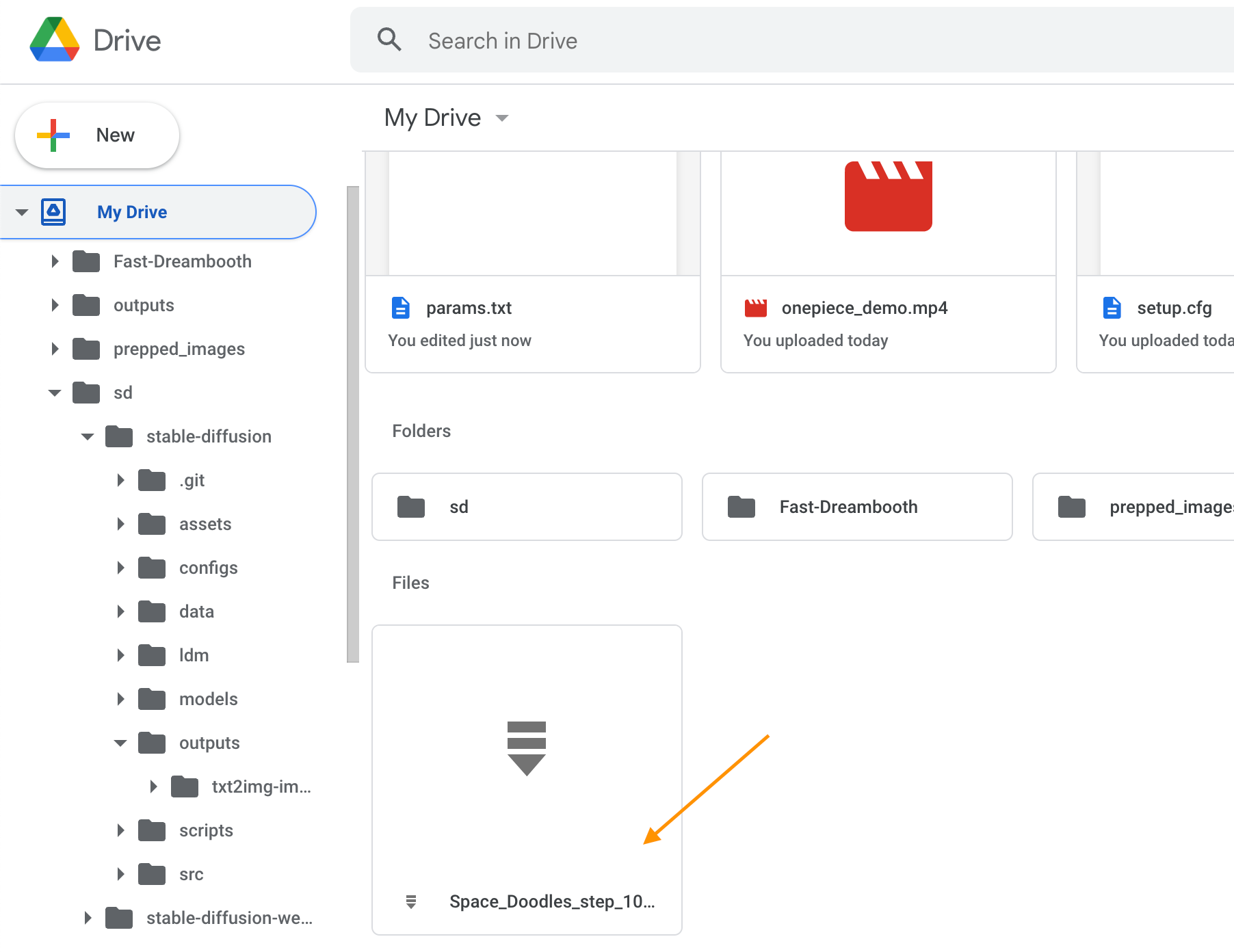

Train your model - 1000 steps with checkpoints at 500

- The 500 step checkpoint was at about 10 minutes for me so I just let it run.

- You can download your 1/2 way checkpoint if you'd like to test it to see

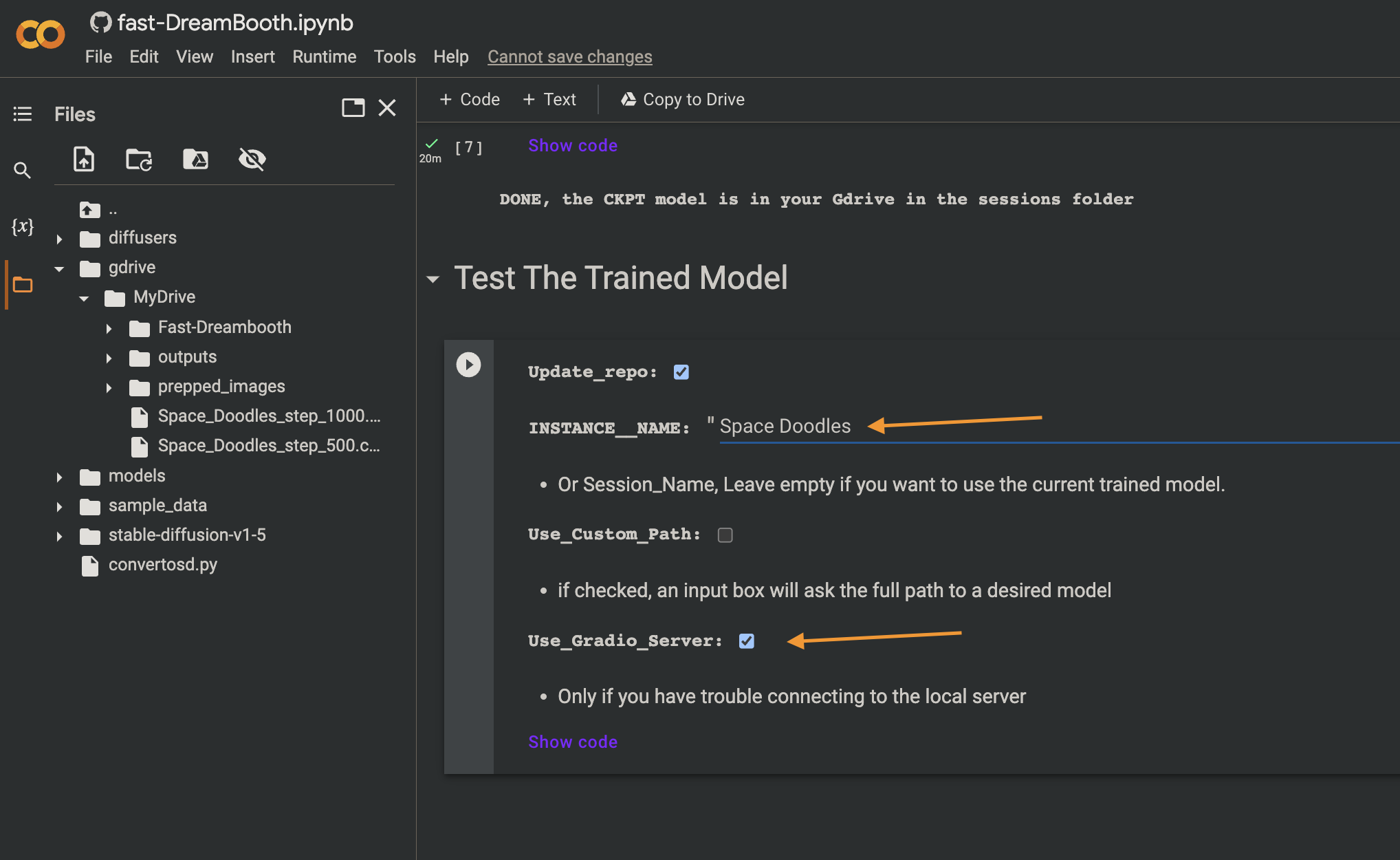

how it is going. - 1000 steps took about 20 minutes, it will automatically stop and then you will have a final checkpoint in your Gdrive.

- If your model is not working you may need more steps 3000 is the default.
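Those timings work out to roughly 50 steps per minute on my Colab session. A quick linear extrapolation, assuming your GPU runs at a similar speed (Colab hands out different GPUs, so it may not), gives a rough idea of how long the 3000-step default would take:

```python
def estimate_training_minutes(steps, ref_steps=1000, ref_minutes=20):
    """Linearly extrapolate training time from one observed run.

    Defaults come from the run described above (1000 steps in about
    20 minutes); your Colab GPU may be faster or slower.
    """
    steps_per_minute = ref_steps / ref_minutes  # 50 steps/minute here
    return steps / steps_per_minute


for steps in (500, 1000, 3000):
    print(f"{steps} steps -> about {estimate_training_minutes(steps):.0f} minutes")
```

This prints about 10, 20, and 60 minutes for 500, 1000, and 3000 steps, so budget roughly an hour if you go with the default.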

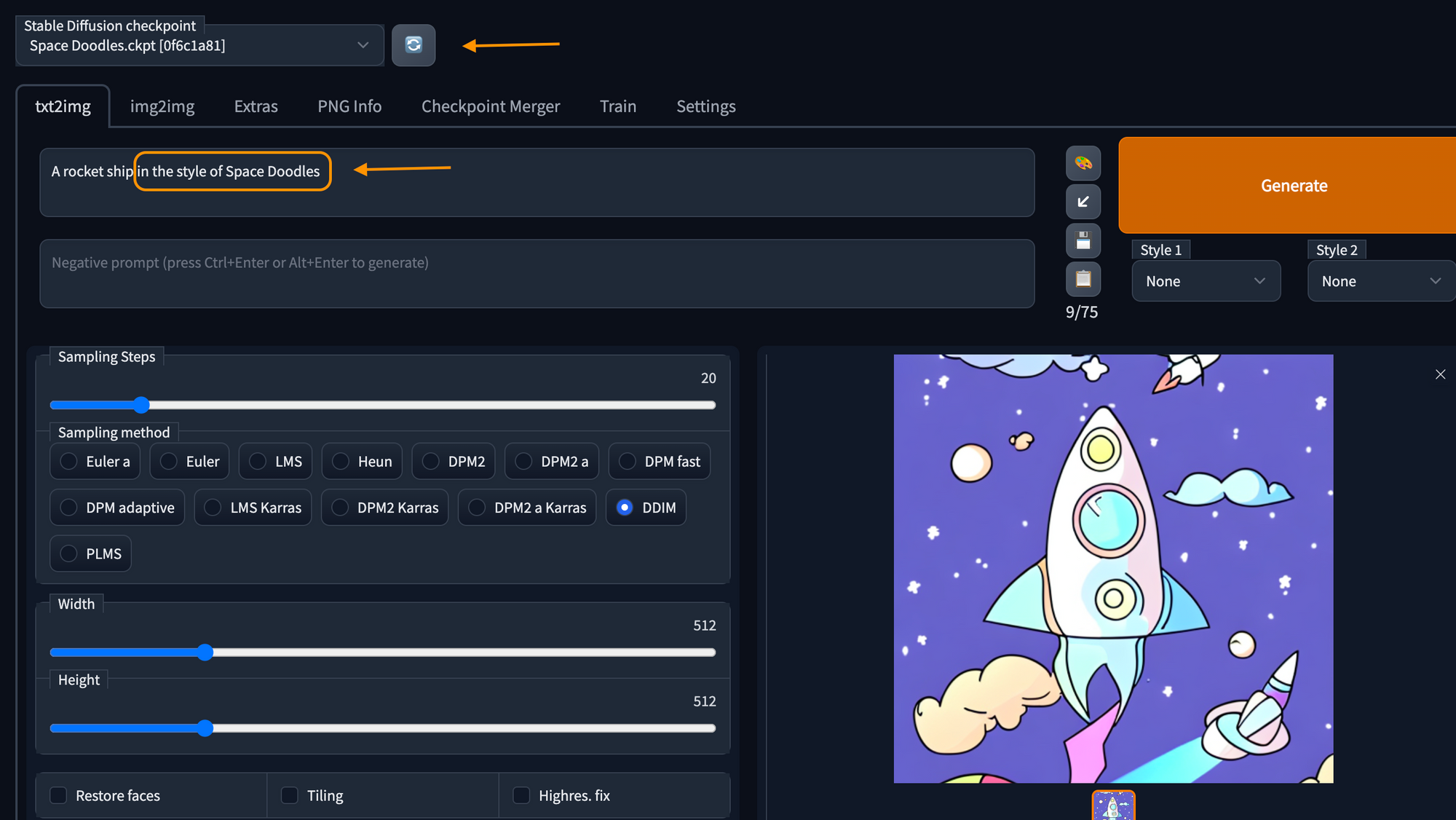

Test your model with Stable Diffusion

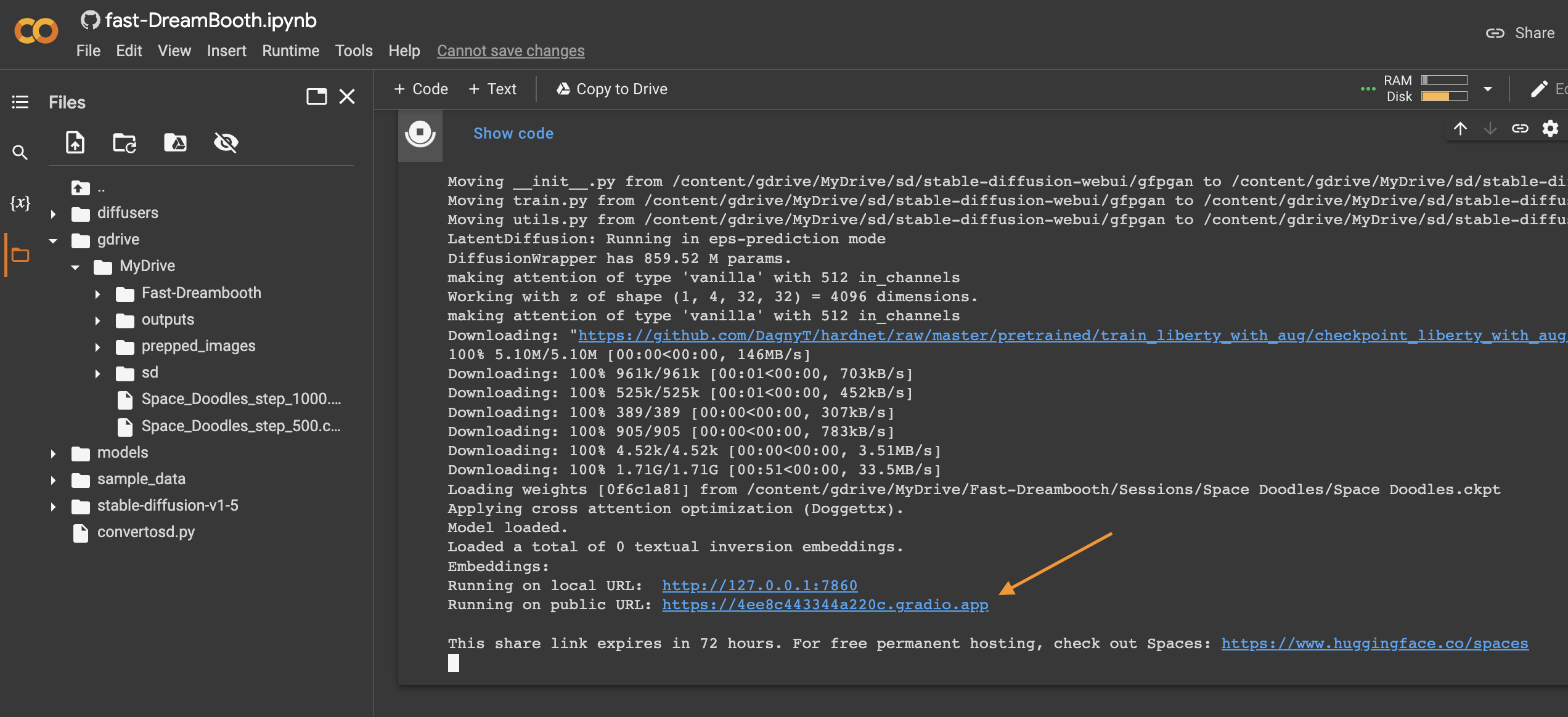

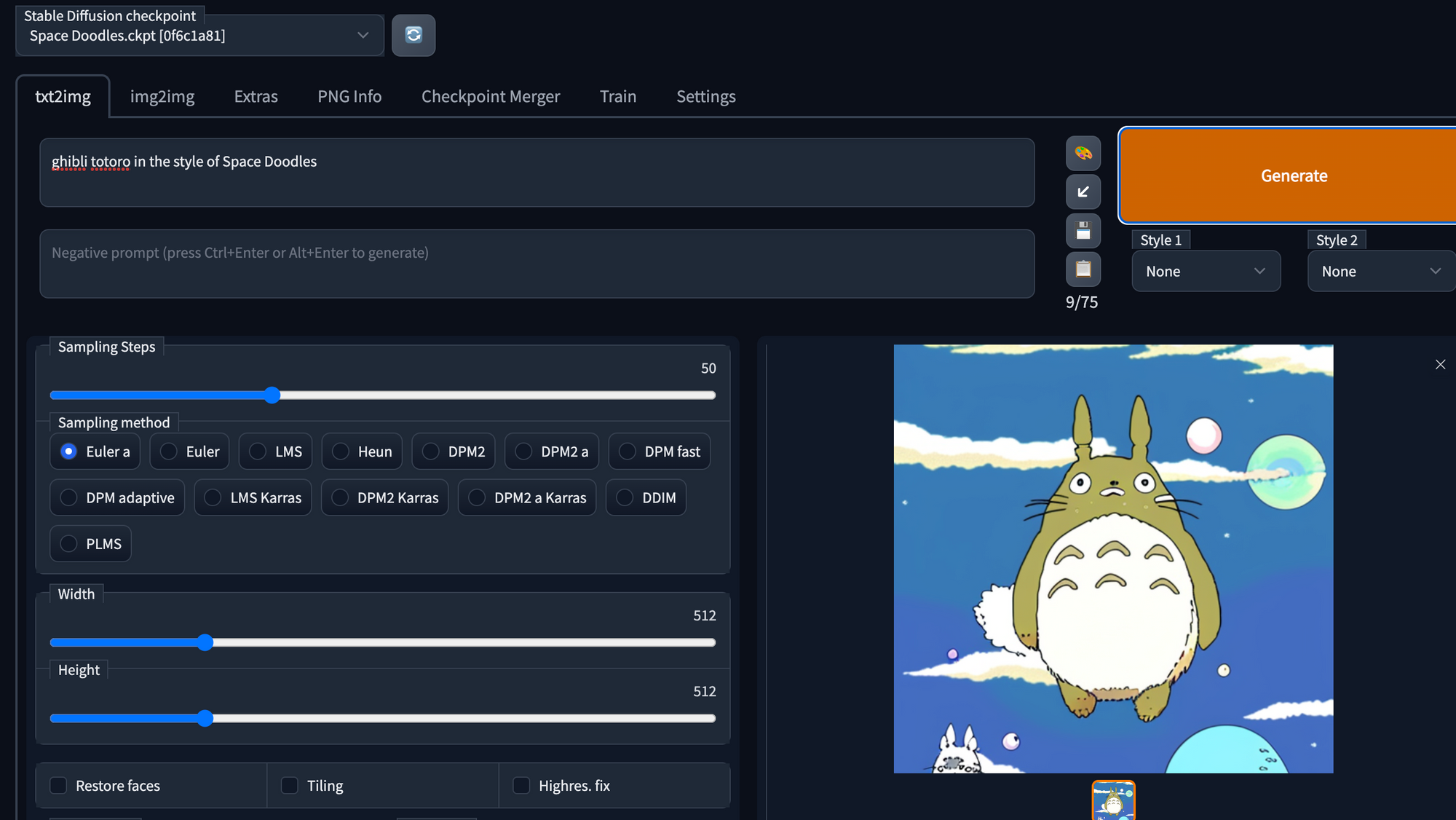

Enter your instance name, and then check the Gradio option so we get a fun web UI.

Once loaded you will get a link to the Gradio website to work with your new model.

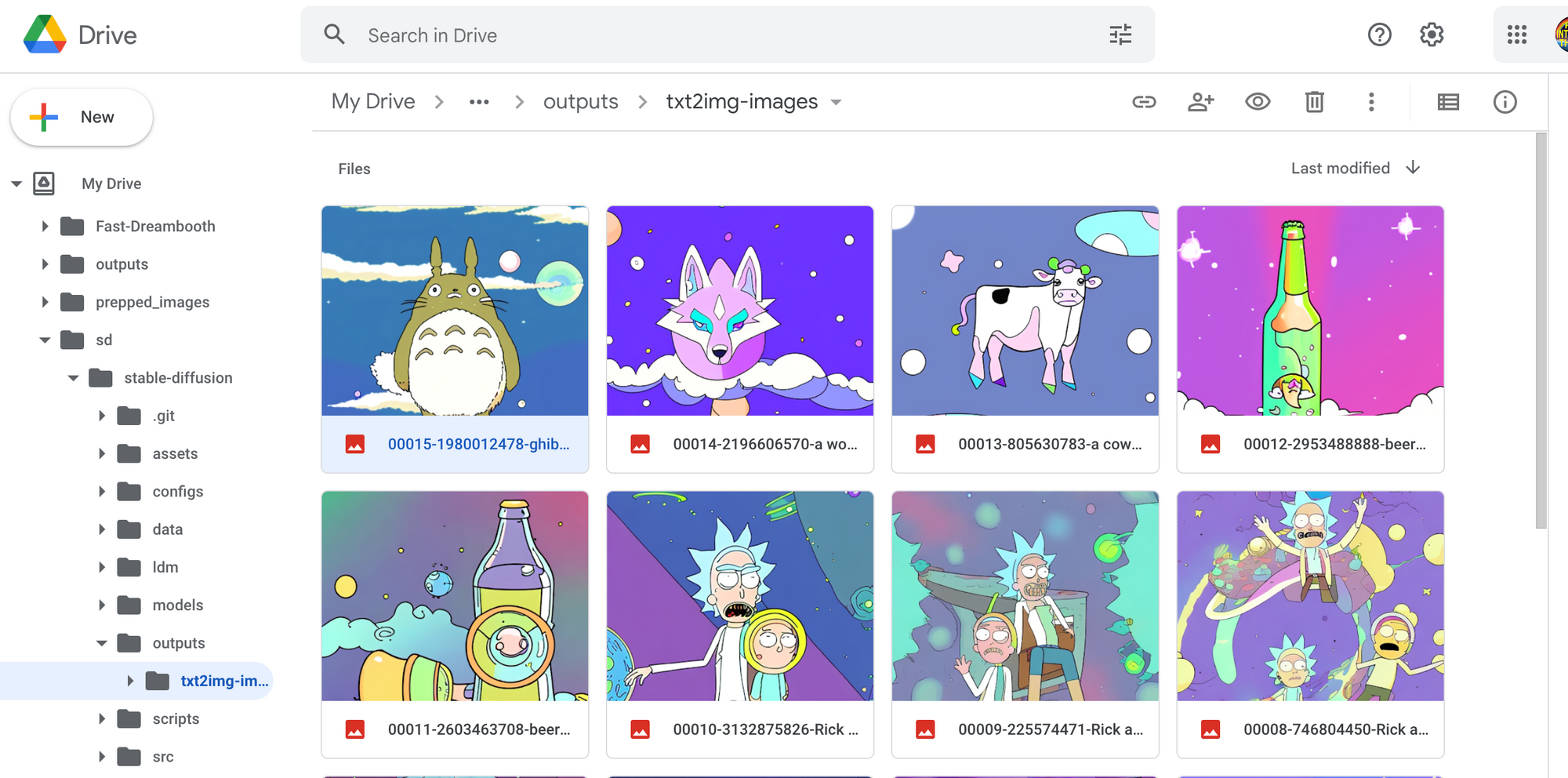

Generate some images:

A rocket ship in the style of Space Doodles

Pretty close, eh?

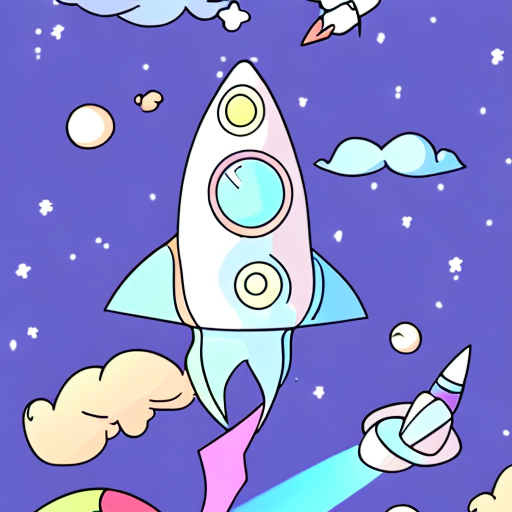

ghibli totoro in the style of Space Doodles

I LIKE IT

Download model [~2GB] or use it in a local install

You could keep it in your Google Drive and then attach a new Stable Diffusion notebook to it as explained here:

[You would just need to copy the correct path].

OR

You can download your model checkpoint and use it in InvokeAI as I explained in this article:

https://bits.jeremyschroeder.net/how_to_use_publicprompts-art_dreambooth_models_with_invokeai/

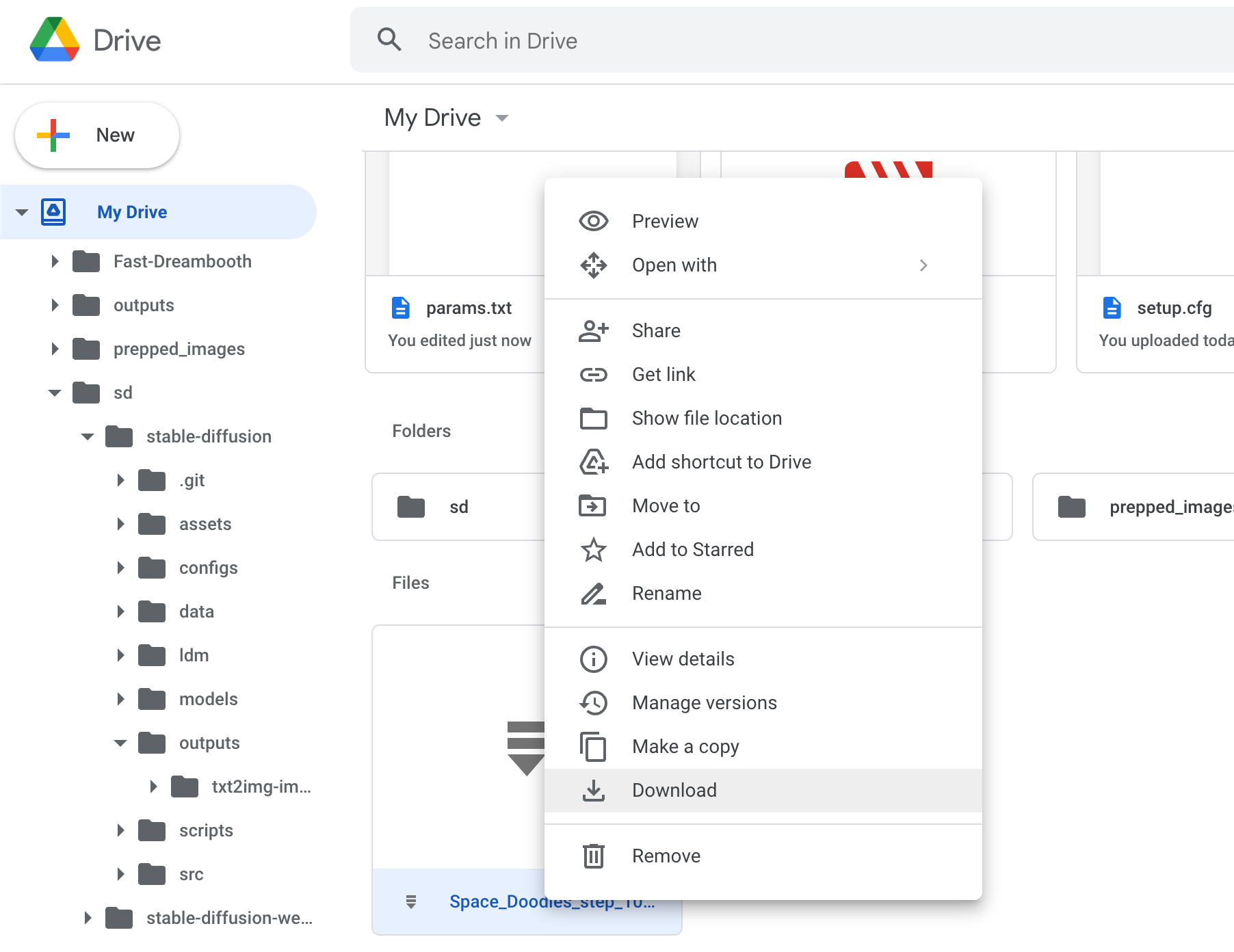

Grab your images

The new images are in sd/stable-diffusion/outputs/txt2img-images in your Gdrive.
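If you'd rather pull the results out from a Colab cell than browse Drive, here is a small sketch that lists the newest generated images and copies them to another folder. It assumes Drive is mounted at `/content/gdrive` (adjust `OUTPUTS_DIR` if your notebook mounts it elsewhere); `newest_images` and `copy_newest` are hypothetical helper names.

```python
import os
import shutil

# Assumption: Drive is mounted at /content/gdrive in Colab; adjust as needed.
OUTPUTS_DIR = "/content/gdrive/MyDrive/sd/stable-diffusion/outputs/txt2img-images"


def newest_images(folder=OUTPUTS_DIR, count=10, exts=(".png", ".jpg", ".jpeg")):
    """Hypothetical helper: paths of the `count` most recently modified images."""
    paths = [
        os.path.join(folder, name)
        for name in os.listdir(folder)
        if name.lower().endswith(exts)
    ]
    # newest first, by file modification time
    paths.sort(key=os.path.getmtime, reverse=True)
    return paths[:count]


def copy_newest(dest, folder=OUTPUTS_DIR, count=10):
    """Hypothetical helper: copy the newest images into dest (created if missing)."""
    os.makedirs(dest, exist_ok=True)
    # shutil.copy2 keeps timestamps and returns the destination path
    return [shutil.copy2(path, dest) for path in newest_images(folder, count)]
```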

Final Thoughts

You now have a tool to generate new art in the style you trained on, at will. Go have fun and make the world a better, more fun place to be.