How to use InvokeAI in developer mode to read in a list of prompts from a text file.

Updating my workflow

When you install InvokeAI there are different options. The easy mode "Automated installer" is recommended for most users. I recently re-installed InvokeAI and chose the "Automated installer" as I wanted to get rid of Miniforge on my laptop.

Docs for the install:

One thing I like doing is testing the different samplers available with the same prompt and seed.

With the automated installer, we need to change how we pull in prompts from a text file.

Here is how you do it.

Like the linked post above, you will need a text file with a bunch of prompts.

Create a file called prompts.txt in the root of your invokeai directory and add your prompts, one per line.

Example prompts (only the sampler changes):

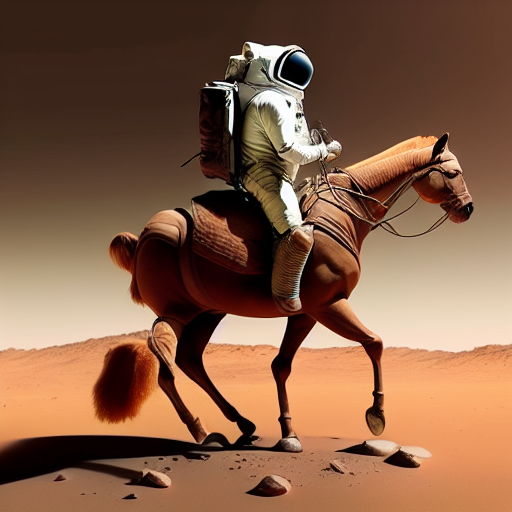

an astronaut riding a horse on Mars -C7.5 -Ak_euler_a -s25 -W512 -H512 -S1337

an astronaut riding a horse on Mars -C7.5 -Addim -s25 -W512 -H512 -S1337

an astronaut riding a horse on Mars -C7.5 -Ak_lms -s25 -W512 -H512 -S1337
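If you want to compare more samplers, typing each line by hand gets tedious. Here is a minimal sketch that builds the same kind of lines for a list of samplers. The function name build_prompt_lines and the script name make_prompts.py are just names I picked for illustration, and the sampler list only contains the three used above.

```python
# Sketch: build one prompt line per sampler, keeping every other setting fixed.
# build_prompt_lines is a hypothetical helper, not part of InvokeAI.

def build_prompt_lines(prompt, samplers, cfg_scale=7.5, steps=25,
                       width=512, height=512, seed=1337):
    """Return one prompt line per sampler, using the flags shown above."""
    lines = []
    for sampler in samplers:
        lines.append(
            f"{prompt} -C{cfg_scale} -A{sampler} -s{steps} "
            f"-W{width} -H{height} -S{seed}"
        )
    return lines


# The three samplers from the example prompts above.
SAMPLERS = ["k_euler_a", "ddim", "k_lms"]

for line in build_prompt_lines("an astronaut riding a horse on Mars", SAMPLERS):
    print(line)
```

Saved as make_prompts.py (again, a made-up name), you could redirect the output into the prompts file with `python make_prompts.py > prompts.txt`.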

Explanation of the arguments

These are the ones I mainly use, but you can find more arguments here:

-C is for cfg_scale: how strongly the generated image should match the prompt.

-A is the sampler to use.

-s is the number of refinement steps to apply.

-W is the image output width.

-H is the image output height.

-S is the seed number. I often reuse the same seed to see how a change in sampler or another argument changes my image.
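To double-check a line before running it, the flags can be pulled apart with a few lines of Python. This is only a rough sketch for the attached-value style used in the examples (like -C7.5). It is not InvokeAI's own parser, and the names FLAG_NAMES and describe_prompt_line are made up for illustration. A prompt that itself contains something like " -x" would confuse it.

```python
import re

# Short flag -> meaning, limited to the six arguments described above.
FLAG_NAMES = {
    "C": "cfg_scale",
    "A": "sampler",
    "s": "steps",
    "W": "width",
    "H": "height",
    "S": "seed",
}

# Whitespace, a dash, one letter, then the value attached directly to it.
FLAG_PATTERN = re.compile(r"\s-([A-Za-z])(\S+)")


def describe_prompt_line(line):
    """Split a prompt line into its text and a dict of settings (as strings)."""
    matches = list(FLAG_PATTERN.finditer(line))
    # The prompt text is everything before the first flag.
    text = line[:matches[0].start()].strip() if matches else line.strip()
    settings = {FLAG_NAMES.get(m.group(1), m.group(1)): m.group(2) for m in matches}
    return text, settings


text, settings = describe_prompt_line(
    "an astronaut riding a horse on Mars -C7.5 -Ak_euler_a -s25 -W512 -H512 -S1337"
)
print(text)
print(settings)
```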

Developer Mode

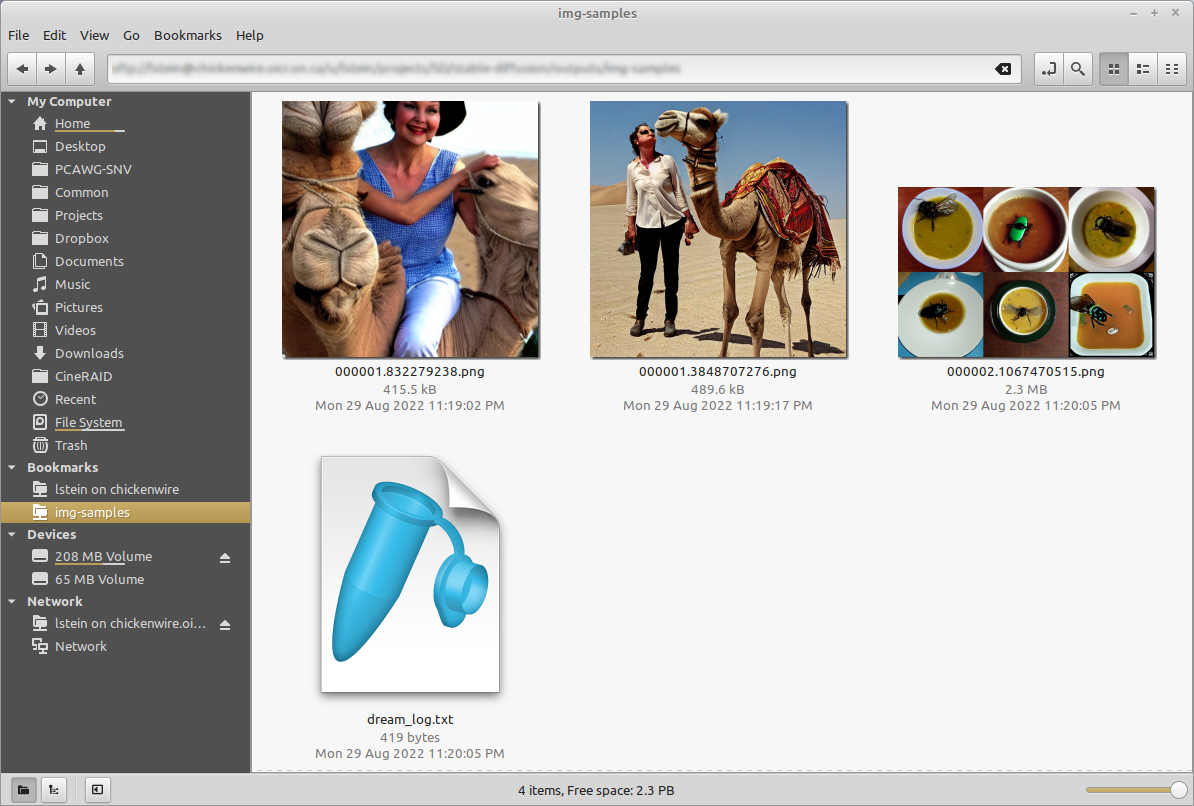

Start up invoke.

Get into developer mode by selecting option 3.

Once in developer mode, the virtual environment is loaded up and ready to go.

Run it

If you have extra Stable Diffusion models loaded and you'd like to use one, add the --model argument to point to your configured custom model.

Otherwise, you just need --from_file to point to the prompts.txt file created earlier.

.venv/bin/python .venv/bin/invoke.py --from_file prompts.txt --model dreamlike
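Since --model is optional, the command comes in two shapes. Here is a small sketch that assembles either one. It only prints the command rather than running it, it assumes the same paths as the command above, and build_invoke_command is a name I made up for illustration.

```python
import shlex

# Sketch: assemble the command shown above, with or without --model.
# build_invoke_command is a hypothetical helper, not part of InvokeAI.

def build_invoke_command(prompts_file, model=None):
    """Return the command as a list of arguments."""
    command = [".venv/bin/python", ".venv/bin/invoke.py", "--from_file", prompts_file]
    if model is not None:
        # Only add --model when a custom model was asked for.
        command += ["--model", model]
    return command


# Default model: only --from_file is needed.
print(shlex.join(build_invoke_command("prompts.txt")))

# Custom model, as in the example above.
print(shlex.join(build_invoke_command("prompts.txt", model="dreamlike")))
```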

Note: I am testing the Dreamlike Diffusion model in this example. You can find it here on Hugging Face.

Example images from prompts above

As you can see, just changing the sampler gives you different results, so it is worth adding this to your workflow. You never know what crazy images you may be missing out on.